I wrote a thesis clearly demonstrating that AI will lead us to full employment. Yet, despite clear evidence that AI is not taking jobs, the scaremongering continues.

Let’s examine why.

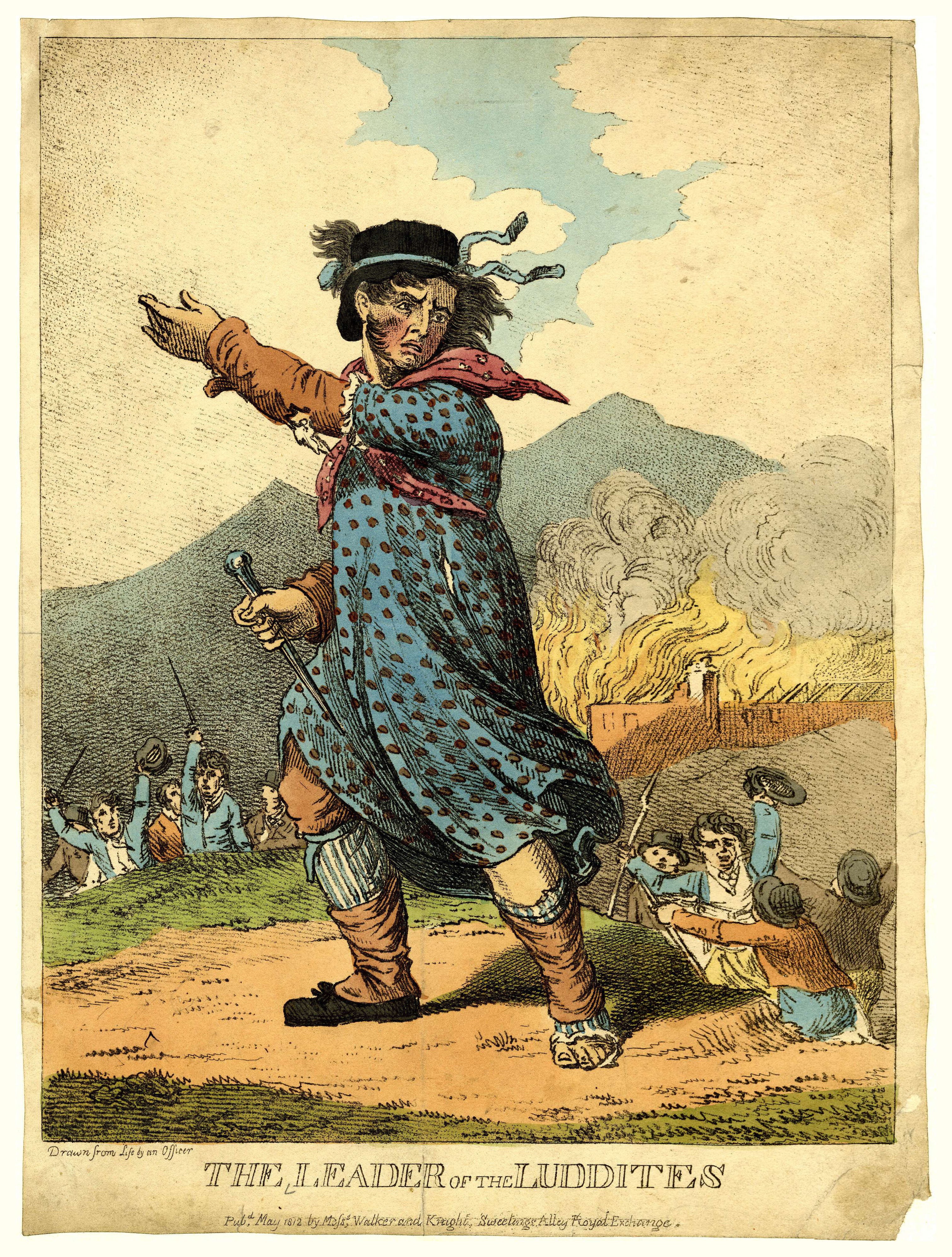

Historical Parallel: Luddites vs. Modern Tech Anxiety

Luddism (1811–16) was a movement in which skilled artisans destroyed textile machinery to protect their livelihoods. Although their concerns were driven by genuine fears of economic insecurity rather than pure technophobia, the fundamental flaw in Luddite thinking was the belief that halting technological advancement could preserve employment. History repeatedly demonstrates that technology ultimately creates more jobs, higher productivity, and greater prosperity, disproving the core Luddite assumption that innovation inherently threatens economic security. Just as the Luddites reacted to mechanization threatening their wages, today’s “neo-Luddites” criticize AI for disrupting employment and social structures.

Socialist Foundations: Linking Worker Rights to Collective Solutions

After the Luddite era, figures such as Robert Owen argued that machinery, if mismanaged, exacerbated inequality. Socialists believed that automation under collective control could eliminate unsafe labor and improve lives. Core socialist ideology promotes collective ownership and mechanisms for redistributing productivity gains, with the aim of addressing the inequality that mechanization produces.

However, socialist experiments, most notably the Soviet Union, the Eastern European communist states, and Cuba, have consistently demonstrated systemic failures, leading to economic collapse, widespread poverty, and suppressed innovation. Although socialist ideology promotes collective ownership and redistribution mechanisms such as Universal Basic Income (UBI), history has shown that these models do not sustainably resolve inequality. Instead, they stifle individual initiative and economic prosperity.

UBI, despite its intent to provide economic stability, risks causing significant inflationary pressures similar to those observed with minimum wage increases. When the government distributes unconditional cash payments, demand for goods and services increases without a corresponding rise in productivity or supply. As consumer purchasing power is artificially inflated, businesses respond by raising prices, creating a self-reinforcing inflationary spiral. Over time, continuous money injection without productivity gains undermines currency value, eroding purchasing power and economic stability. Ultimately, unchecked inflation driven by policies such as UBI could destabilize monetary systems, diminishing savings, discouraging investment, and triggering economic collapse.

AI-Fueled Fear as Political Strategy

The fact that 70–88% of the UK public supports AI regulation underscores a dangerous phenomenon: policymakers harnessing widespread fears of job displacement and bias to justify expansive governmental intervention. This strategy exploits anxiety to shift power away from individual autonomy and free markets and toward centralized regulatory bodies. Compounding the issue, prominent tech figures such as Sam Altman and Elon Musk advocate for UBI under the guise of distributing the benefits of AI-driven productivity. Critics argue that this approach disguises a troubling centralization of economic and political power, effectively placing the populace in a state of dependency on government or corporate elites. Consequently, society risks losing individual agency, ceding control to regulators and influential technocrats who, under the premise of fairness and safety, could wield AI as an unprecedented tool for population control and economic manipulation.

Regulation to Centralization: Government by Algorithm

Algorithmic governance represents a profound shift beyond traditional regulatory oversight, transforming AI into a direct instrument of government control. Under AI regulations, governments gain unprecedented capabilities to manage and shape societal behavior, economic transactions, and access to critical services through sophisticated AI-driven systems. This transition fundamentally alters the nature of governance, moving from representative democracy and decentralized markets toward centralized, algorithmically enforced decision-making.

Framing these regulations as protective measures against job loss, discrimination, and misinformation exploits widespread technology anxiety to legitimize expanded governmental authority. However, this protective narrative obscures the deeper consequence of regulatory overreach: increased dependency on the state. As AI regulation intensifies, market-driven innovation and individual autonomy diminish and are replaced by centralized control structures. Citizens and businesses become increasingly reliant on government-approved AI platforms and algorithms, which concentrate data collection, surveillance, economic management, and social oversight in the hands of a few technocratic elites.

Such centralization erodes the dynamic adaptability inherent in free-market systems, potentially stifling innovation, diminishing economic freedom, and reducing individual agency. Ultimately, algorithmic governance may lead to a form of digital authoritarianism, in which government-controlled AI algorithms invisibly shape and restrict personal choices, market interactions, and societal norms, turning protective intentions into profound threats to liberty and democracy.

UBI as Trojan Horse

UBI is increasingly presented by its advocates as an essential response to the imminent threat of AI-driven job displacement. While seemingly compassionate, this narrative operates as a Trojan Horse to introduce a radical restructuring of society that carries profound risks.

Rather than empowering individuals, UBI entrenches widespread economic dependency, reducing incentives to innovate, work, and engage productively in society. By creating guaranteed financial reliance on government payments, it fundamentally reshapes individuals’ relationship with the state, turning them from independent actors into passive recipients of state-sponsored welfare.

Furthermore, this dependency opens pathways to unprecedented state intervention in personal decisions, consumption habits, and economic behavior. As UBI recipients increasingly rely on government stipends, authorities gain substantial leverage over citizens and can influence behavior through conditional distributions, selective adjustments, or algorithmic oversight tied to social credit systems. In this scenario, the state wields direct economic influence over individuals, creating a society vulnerable to subtle yet pervasive social control.

Ultimately, UBI serves as a vehicle for expanding governmental power, reducing personal autonomy, and institutionalizing economic passivity. Instead of liberating individuals, UBI will construct a deeply centralized, economically dependent society, one that prioritizes governmental authority over individual initiative and freedom, with profound consequences for personal liberty and economic dynamism.

We’ve been there. This approach will bankrupt and destroy us all.

Counter-Arguments & Safeguards

Substantial evidence demonstrates that AI will likely generate more employment opportunities than it displaces. Historically, technological revolutions, from mechanized agriculture to computing, have consistently led to new industries and increased employment. Current studies reinforce this, including World Economic Forum forecasts that predict a net gain of millions of jobs driven by emerging sectors such as AI-assisted healthcare, precision agriculture, and personalized technology services.

Central to ensuring this positive outcome is preserving a dynamic, free-market economy. Market-driven systems adapt rapidly, fostering innovation, entrepreneurship, and competition. These are essential conditions for creating jobs and enhancing overall prosperity. Unlike centrally planned systems, which struggle with responsiveness and adaptability, free markets allow decentralized actors, from freelancers and small businesses to startups, to leverage AI tools effectively, driving widespread economic growth and shared prosperity.

Rather than resorting to universal income transfers or centralized control mechanisms, effective safeguards focus on re-skilling and up-skilling displaced workers, facilitating their transition into new industries. Flexible, temporary safety nets linked to training programs maintain incentives for economic participation without fostering dependency. Policies that encourage entrepreneurship and reduce barriers to market entry further strengthen economic resilience. Ultimately, embracing AI within an open, market-oriented framework offers the best path toward improving collective wealth and individual well-being while avoiding the pitfalls of dependency and governmental overreach.